AI SaaS: Collaborative Features for RFP Teams

Providing a comprehensive suite of tools to better facilitate cross-team collaboration during RFP response flows using AI assistance.

CEO and COO

Engineering Team (3)

Figma: Low to High-Fidelity Mockups, Prototyping

Miro: Ideation & Synthesizing User Insights

PostHog: Analyzing User Metrics & Behavior

Maze: User Testing

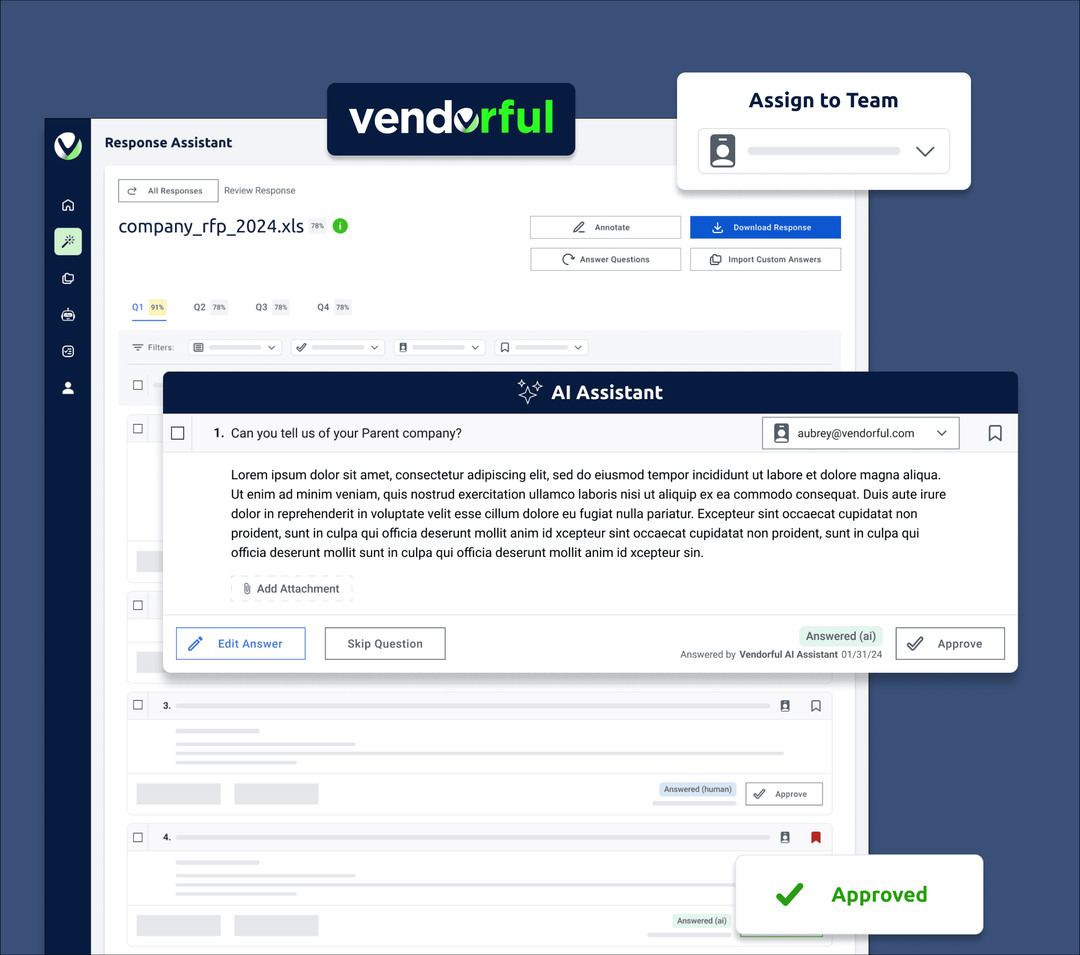

The collaborative feature set is meant to resolve the main pain point for remote proposal teams: syncing on the answers that Vendorful’s AI Assistant generates for their RFPs.

As a human-centered designer, I champion the design process and prioritize user experience from the conceptual stage onward, and that mattered especially as we set out to introduce new collaborative features to our AI-assisted response review. Because these features encompass multiple components, it was imperative that they address our customers' pain points without introducing unnecessary complexity. Modularity was also key, so the features could be integrated into other flows and screens with similar functionality. This phase also presented a valuable opportunity to gain insights from our current software users, who have been vocal about the need for collaborative features to alleviate their pain points.

Before delving into outlining our Sprint, we conducted separate meetings with two of our business customers, whom we consider our design partners, given the early stage of RFP response product development. This allowed us to cultivate trust with our customers, who were eager to provide feedback and invest time to ensure the software aligns closely with their team's needs and workflows.

These conversations proved enlightening and gave me, as a user-centric designer, a deeper understanding of their internal workflows. The two customers are similar: both have business development teams with multiple members working remotely or in a hybrid capacity. They need a collaborative platform that supports efficient communication and simultaneous work on individual response questions. That means bringing team members' varying expertise into the responses and treating each answer as a task with visible progress, approval, and status updates.

Based on our meetings with our design partners, I iterated on a customer problem statement for the team to align on and use as a guide for our collaborative features Sprint. We then worked cross-functionally to discuss how best to solve the problem statement, ideating on the collaborative feature set and surfacing engineers' concerns about technical constraints.

I compiled a list of collaborative features from internal discussions and design partner feedback. I organized these features into categories and facilitated a workshop for the team to collectively assess their ROI and alignment with business and user needs. These exercises informed our phased approach and prioritized the most valuable collaborative features for the development roadmap.

Sometimes, the most effective approach to kickstarting a creative project is by immediately putting ideas down on paper. Paper provides a low-stakes environment for rapid design iteration, so that’s where I began.

In developing this collaborative feature set, I initially focused on its modularity. I envisioned these features being displayed both collectively on a review screen and individually as pages, each hosting a single Q/A pair. This setup would facilitate easy assignment of Q/A pairs to individual team members.

During my brainstorming, I decided to depart from the table design we had used previously after discussions with the Lead Engineer. Instead, I conceptualized the Q/A pair as a component, akin to a card, with critical actions and statuses attached. This approach allowed for greater visibility of user actions compared to our initial version of the response flow, where many actions were nested within the "edit answer" function.

Moreover, I wanted to streamline the process of assigning and approving Q/A pairs. I envisioned users being able to scroll down the page and quickly click through a neat column of actions to assign or approve Q/A pairs individually. Alternatively, they could easily select multiple Q/A pairs and execute bulk actions, with clear visibility into which cards were selected.
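For readers who think in code, the card-with-actions idea can be sketched roughly as follows. This is only an illustration under assumed names: `QACard`, `assign`, `approve`, and `applyBulk` are hypothetical and do not reflect Vendorful's actual data model or implementation.

```typescript
// Hypothetical model of a Q/A card. All names are illustrative assumptions.
type ApprovalStatus = "pending" | "approved";

interface QACard {
  id: string;
  question: string;
  answer: string;
  assignee: string | null; // team member responsible for this Q/A pair
  approval: ApprovalStatus;
  selected: boolean; // whether the card is part of the current bulk selection
}

// Single-card actions return a new card rather than mutating the input.
function assign(card: QACard, member: string): QACard {
  return { ...card, assignee: member };
}

function approve(card: QACard): QACard {
  return { ...card, approval: "approved" };
}

// A bulk action applies the same single-card action to every selected card
// and leaves unselected cards untouched.
function applyBulk(cards: QACard[], action: (card: QACard) => QACard): QACard[] {
  return cards.map((card) => (card.selected ? action(card) : card));
}

const cards: QACard[] = [
  { id: "q1", question: "Describe your security posture.", answer: "Draft A", assignee: null, approval: "pending", selected: true },
  { id: "q2", question: "List supported integrations.", answer: "Draft B", assignee: null, approval: "pending", selected: false },
  { id: "q3", question: "Outline your SLA terms.", answer: "Draft C", assignee: null, approval: "pending", selected: true },
];

// Assign every selected card to one (hypothetical) teammate, then approve them.
const assigned = applyBulk(cards, (card) => assign(card, "dana"));
const approved = applyBulk(assigned, approve);
```

Because a bulk action here is just a single-card action mapped over the selection, the same card can appear on a shared review screen or on its own page without changing how assignment and approval behave.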

In my iteration process, rapid prototyping plays a vital role in validating designs and gathering user feedback. Leveraging the existing design groundwork from previous versions of the response review and manage answer screens, I transitioned directly from paper prototypes to a mid-fidelity stage. This approach was efficient as it allowed me to integrate numerous familiar UI components seamlessly.

I designed comprehensive flows for collaborative team response review, incorporating features such as user assignment, approval, bulk actions, and filters. Using Figma, I created prototypes which were then deployed on Maze's platform for user testing and feedback with our design partners, and for internal team review.

The feedback we received played a pivotal role in shaping the next design iteration. Here, I outline the key changes informed by testing for both the Q/A component and the response review screen. With the updates made to the response review screen, it was important to align the UI components of the Manage Answer screen accordingly. This not only ensures consistency and familiarity within the software's interface but also streamlines the process for engineers by enabling them to efficiently reuse components.

User Feedback & Insights

1.) This is the component the user will interact with most, both to get the information they need on a response item and to perform collaborative functions such as assigning an answer or approving one assigned to them.

2.) “When approving, I want to see quickly who last updated.”

3.) “Assigning a team member and approving an answer are separate workflows.”

4.) “Seems like Editing Answer is the most important action, and I don’t recognize that I can assign someone else.”

5.) “I think approval makes sense with the answer vs. the question. I want to go through the process quickly by reviewing the answer, making edits or assigning if needed, and then approving.”

Iteration Improvements

1.) I used the feedback from users interacting with the mid-fidelity prototype to inform the high-fidelity design of the component.

2.) Critical actions pertaining to collaboration, such as approving, assigning, or bookmarking, were placed on the right-hand side.

3.) Answer status was moved near approval status, since users said they want to see it at the moment they are approving.

4.) Assignment was brought to the question level, since teams assign certain types of questions based on who in their org has the relevant expertise. It was also made a less prominent CTA.

5.) The Edit Answer CTA was made less prominent so as not to distract from the question/answer content, yet it remains a primary CTA for the component.

6.) Based on UI improvements, the card height is now smaller, which helps when a page lists many Q/A cards.

User Feedback & Insights

1.) “There are a lot of actions to digest on one page.”

2.) “I will need to scroll for a while to go through this answer set.”

3.) “It seems like there is a lot of potential to collaborate with my team here.”

4.) “Filters to get more granular with the info will be very helpful.”

5.) During user testing, I discovered that users' attention regularly crossed back and forth from one card to the next. Although this might be good for engagement-type UI, it is not good for administrative UI, where users need to review a lot of information quickly.

6.) There’s a lot of information, but the design needs to be more tactical about how it will function and how users will collaborate and filter through it.

Iteration Improvements

1.) I used the feedback from users interacting with the mid-fidelity prototype to inform the high-fidelity design of this page.

2.) The review page went from a zig-zag pattern to two vertical columns, each with a different function.

3.) The left-hand side is for viewing and editing the answer.

4.) The right-hand side is for critical actions pertaining to collaboration, such as approving, assigning, or bookmarking. Users can scroll through the page and know right away whether they or someone else needs to approve an answer.

5.) CTAs were made less prominent so that users can review this page more quickly and get the information they need.

6.) Based on UI improvements to the cards, more cards now fit on a page in less space.

7.) Filters and bulk actions are now apparent and provide granularity for review use cases.

I met with the team for a comprehensive review of the design and user flows, ensuring alignment across design, development, and management before developer implementation. Following some minor updates, I delivered high-fidelity designs, user flows, and detailed notes to the development team for the integration of collaborative features.

Please refer below for a more comprehensive breakdown and detailed views of the feature set that was integrated into Vendorful.ai's RFP software and implemented in production.

Our collaborative features launched successfully in April 2024. My current focus is on gathering user feedback, analyzing metrics, and deriving insights to assess the performance of this feature set.

To further evaluate the success of our feature launch, the following next steps are outlined:

User Feedback Collection: Conduct user surveys to gather qualitative feedback on the collaborative features. Schedule user interviews to gain deeper insights into user experiences and preferences.

Metric Analysis: Analyze quantitative data from analytics tools to identify usage patterns and user behaviors, and track KPIs such as engagement and task completion times. Compare metrics against pre-launch benchmarks to assess the impact of the new features.

Insights Formation: Synthesize user feedback and metric data to identify strengths, weaknesses, and areas for improvement. Collaborate with cross-functional teams to interpret findings and prioritize action items for future feature development.

Continuous Improvement: Iterate on the feature set based on user feedback and performance insights. Implement updates and enhancements to address user needs and optimize user experience.

If you found my work intriguing, don't hesitate to reach out—I'm actively exploring new opportunities and would welcome the chance to connect further!